
Why do I need a site map and how do I create one?

Why do I need a site map, and how do I create a site map that Google and other search engines can access? This entry also discusses how to let Google know you have submitted a site map and how to invite them to come and visit your website.

Much has been said in the past about site maps and how effective they are. In the “good old days”, when the internet was small, it was relatively easy to get your website pages indexed by the different search engines.

In “today’s internet” things are a bit different. Thousands of pages are published every minute, and you need to compete with each of those publishers for a bit of “bot time” from the search engines: time for a bot to come and look at your new page and decide whether it is worth the effort to add it to the search engine index.

What is a “site map”?

In short, a “site map” is a file or document that lists all the pages on your website with the correct page titles, URLs and other relevant information, so that a search engine bot or spider can easily and effectively crawl your website looking for new pages.

If you have a large website with lots of pages, articles or stock items but no site map, a bot could find one of your pages, index it and then follow any links you might have on that page to the next page, and so on. If you rely on this method and have more than one link on a page, which link is the bot going to follow? Is it even going to come back to the initial page and follow the other links?
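To make the problem concrete, here is a toy model of link-following in Python. The `links` dictionary and the `crawl` function are made up for illustration, and the model is generous: it assumes the bot follows every single link it finds, which a real bot may not do. Even so, a page that nothing links to is never discovered.

```python
from collections import deque

def crawl(links, start):
    """Return every page reachable from `start` by following links."""
    seen = {start}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in seen:
                seen.add(target)
                queue.append(target)
    return seen

# Hypothetical site: "/clearance" exists but no other page links to it.
links = {
    "/": ["/shoes", "/about"],
    "/shoes": ["/shoes/red"],
    "/clearance": [],
}

found = crawl(links, "/")
print("/clearance" in found)  # the unlinked page is not found
```

A site map sidesteps this by listing every page directly, whether or not anything links to it.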

Having a proper, dedicated site map gives the bot a central place to start indexing from. The format, naming and location of the site map are recognised by all the search engine bots, which drastically improves your chances of getting your pages into the search engine indexes. The site map also serves as a reference for the “importance” of a page as well as the last time it was updated.
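As a minimal sketch of what such a file contains, the Python below builds an XML site map in the sitemaps.org format, where each page gets a `loc` (its URL) plus optional `lastmod` and `priority` values. The `build_sitemap` function, the page list and the example.com addresses are assumptions for illustration only; a generator tool produces the same kind of file for you.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(pages):
    """Return site map XML as a string for a list of page dicts."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for page in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = page["loc"]
        if "lastmod" in page:    # date the page was last updated
            ET.SubElement(url, "lastmod").text = page["lastmod"]
        if "priority" in page:   # relative importance, 0.0 to 1.0
            ET.SubElement(url, "priority").text = str(page["priority"])
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body

# Hypothetical pages, for illustration only.
pages = [
    {"loc": "https://www.example.com/", "lastmod": "2024-01-15", "priority": 1.0},
    {"loc": "https://www.example.com/shoes.html", "lastmod": "2024-01-10", "priority": 0.8},
]
print(build_sitemap(pages))
```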

How do I create a site map?

A quick Google search will give you an idea of how often this topic is discussed:

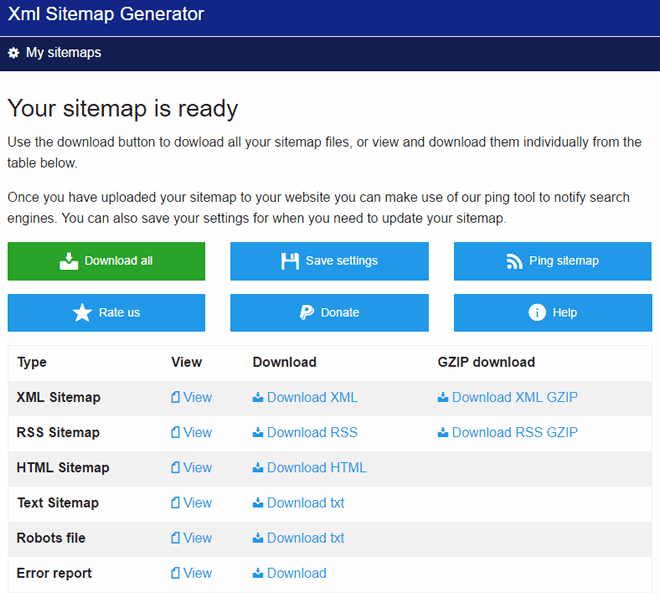

I have recently discovered an HTML and Google XML Sitemap Generator that does the job pretty well. As a bonus, it is simple enough to be used by someone who is not a webmaster, yet flexible and powerful enough for someone who is, and best of all it is free. You have the option to use it online or to download a desktop version that can connect to your hosting account and upload the created site maps for you.

A couple of things I find really useful about the XML Sitemap Generator are:

- it actually tells you where the problem is if it cannot index or crawl your site

- it creates 6 different site maps you can use

- it allows you to “ping” the search engines to let them know you have new or updated site maps

- you can define what file extensions to include

- you can define what query string parameters to exclude

- you can define what images to include, or exclude all images from the map

- you can use filters to exclude any folders and URLs from the map, which is useful for excluding login pages and calendar days, months and years…

- on completion it generates an error report you can download to see if anything is not indexable or if you have any dead links
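To give a feel for what the extension, query string and folder filters do, here is a rough Python sketch. It is not how the generator itself is implemented; the function names, the `sessionid` parameter, and the `/login` and `/calendar/` paths are all hypothetical examples.

```python
import posixpath
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

def clean_url(url, drop_params=("sessionid",)):
    """Remove unwanted query string parameters (and any fragment)."""
    parts = urlsplit(url)
    kept = [(key, value)
            for key, value in parse_qsl(parts.query, keep_blank_values=True)
            if key not in drop_params]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))

def include_url(url,
                allowed_ext=("", ".html", ".php"),
                excluded_prefixes=("/login", "/calendar/")):
    """Decide whether a URL belongs in the site map."""
    path = urlsplit(url).path
    if any(path.startswith(prefix) for prefix in excluded_prefixes):
        return False  # filtered-out folder or page
    extension = posixpath.splitext(path)[1].lower()
    return extension in allowed_ext

candidates = [
    "https://www.example.com/shoes.html?sessionid=abc&page=2",
    "https://www.example.com/login",
    "https://www.example.com/calendar/2024/05/",
    "https://www.example.com/brochure.pdf",
]
kept = [clean_url(u) for u in candidates if include_url(u)]
print(kept)
```

Only the first address survives the filters here, with the session parameter stripped from it.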

What do I do with the site map?

If you are unlucky enough not to be using XMS Systems, you will have to figure out how to work an FTP client and upload the site maps to the root of your website.

You will also need to make sure the suggested content for the “robots.txt” file in the screenshot above is added to the robots.txt file on your website.
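The generator's suggested text is not reproduced here, so the following is only an assumption about what such an entry typically looks like: a `Sitemap:` line giving the full address of the site map. This small Python sketch adds that line to existing robots.txt content if it is not already there. The function name and the example.com address are hypothetical.

```python
def add_sitemap_line(robots_txt, sitemap_url):
    """Return robots.txt content with a Sitemap line for `sitemap_url`."""
    line = f"Sitemap: {sitemap_url}"
    # Leave the file alone if the exact line is already present.
    if line in (existing.strip() for existing in robots_txt.splitlines()):
        return robots_txt
    body = robots_txt.rstrip("\n")
    separator = "\n\n" if body else ""
    return body + separator + line + "\n"

current = "User-agent: *\nDisallow: /login\n"
print(add_sitemap_line(current, "https://www.example.com/sitemap.xml"))
```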

Once your site maps are uploaded and accessible via your browser, you can log into “Google Search Console” to submit your site map and verify that Google can access it.
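Before submitting, it can be worth checking that the uploaded file is well formed and lists the pages you expect. The Python below is a minimal sketch of such a check, assuming a standard XML site map; `fetch_sitemap` and `list_sitemap_urls` are hypothetical helper names, and the sample data uses example.com addresses so the snippet runs without network access.

```python
import urllib.request
import xml.etree.ElementTree as ET

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

def fetch_sitemap(url):
    """Download the raw site map bytes (needs network access)."""
    with urllib.request.urlopen(url, timeout=10) as response:
        return response.read()

def list_sitemap_urls(xml_data):
    """Parse site map XML and return the page URLs it lists."""
    root = ET.fromstring(xml_data)  # raises ET.ParseError if malformed
    return [loc.text.strip()
            for loc in root.findall("sm:url/sm:loc", NS)
            if loc.text]

# Sample data standing in for fetch_sitemap("https://.../sitemap.xml").
sample = b"""<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/</loc></url>
  <url><loc>https://www.example.com/shoes.html</loc></url>
</urlset>"""

for page_url in list_sitemap_urls(sample):
    print(page_url)
```

If the file does not parse, or the list comes back empty, that is worth fixing before asking Google to read it.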

Keep in mind that there is no guarantee that Google or any of the other search engines will index all the pages you submit for indexing via your site map. Their algorithms check a number of things to determine whether a page is “worthy” of being indexed.

One of the main guidelines is simply this: “Does the page add value to the internet by supplying relevant quality information that is useful to the community?”

I keep telling my clients that you need to qualify your product and convince Google that your version of a specific product is better than the exact same product your competitor is selling. Search engines are not going to rank your product above your competitor’s because they like you. As far as Google is concerned, a shoe is a shoe… The quality and relevance of the information you give with your shoe is one of the aspects that will get you ranking higher in the search engine results pages.

Using Site maps with XMS Systems